Review calls for responsible use of metrics in UK research

Metrics should support, not supplant, expert judgement in research assessments, an independent review has concluded. The Metric Tide review argues that metrics, or indicators, can be a force for good, but only if they are used responsibly, and that wider use of metrics can support a shift to a more open, accountable research system.

The review was commissioned last year by the Higher Education Funding Council for England (Hefce), which is preparing to launch a consultation this autumn on the next assessment of UK universities, the Research Excellence Framework (REF). The outcome of REF is extremely important to universities as it affects the distribution of around £1.6 billion of funding each year.

‘Some indicators can be informative but you must always understand their context and they should only be used to inform peer judgement,’ says Stephen Curry, a structural biologist at Imperial College London, UK, and review panel member. ‘They may have value, but the core message is they need to be used responsibly.’

The report notes that powerful currents are whipping up the metric tide: the growing pressure for evaluation of public spending, the demand by policymakers for better strategic intelligence on research quality and impact, and rising competition within and between institutions.

Cost cutting

The report states that if the underlying data infrastructure can be improved, the cost of gathering metrics should fall and this could ease peer review efforts in some areas. It therefore recommends that indicator data be standardised and harmonised.

The review was accompanied by a substantial literature review and an analysis of the REF2014 outcomes. ‘REF is a burdensome exercise, so we looked at whether we could use metrics to ease the burden of evaluation,’ says Curry. ‘Unfortunately the finding is: probably not.’

Individual metrics gave significantly different outcomes from the REF peer review process, and cannot provide like-for-like replacement. A similar effort to predict REF success based on metrics was also ‘wildly inaccurate’. Even with established bibliometric indicators the correlation with REF outcomes was ‘surprisingly weak’, notes chair of the review James Wilsdon, professor of science and democracy at the University of Sussex, UK, who advocates the use of the term indicators over metrics. ‘Quantitative data can enrich and be included in the REF, but there is no sensible prospect in the near future of moving away from a predominantly peer review REF to a metric REF,’ he adds.

The metrics used by higher education institutes, funders and publishers need to fit together better, the review notes. There is a need for greater transparency in their use of indicators, particularly for university rankings and league tables. These were pilloried in the report, and the misuse of journal impact factors, h-indices and grant-income targets to gauge quality also came under fire.

Ethical measures

Individual researchers should also be conscious of their own behaviour when using metrics, Wilsdon says. Responsible use means that metrics should be robust, transparent, diverse and humble. Avoid slapping an h-index on your CV or being the person who brings up journal impact factors on a panel, advises Wilsdon. ‘Go the extra mile, read the paper.’

Other non-traditional metrics such as downloads, social media shares and other measures of impact ‘can be useful tools for learning about how research is disseminating and playing out beyond academia’, says Curry. But he adds that they are ‘not a reliable measure of research quality’.

Others argue quantitative data has a role to play. ‘There is room for both quantitative and qualitative measures. To us they’re definitely two sides of the same coin,’ comments Euan Adie, founder of Altmetric.com. ‘The temptation might be to say, well, metrics can be abused, so therefore we should stick to just peer review, which is also not without problems. That’s throwing the baby out with the bathwater. We trust people to make good peer review judgments – surely with the right help and approach we can trust them to use metrics sensibly too.’

An undercurrent throughout the review is the concern that an obsession with counting everything is undermining research. Wilsdon says the research community is polarised on the issue, with some supporting a move to metrics to save time, while others vehemently oppose them.

‘It is astonishing to me that Hefce even had to do this report in some respects,’ comments Lee Cronin at the University of Glasgow, UK. ‘Do you judge an artwork by the number of strokes in the painting?’ He welcomes the importance of peer review and context emphasised in the report and is proud of the UK’s attitude. ‘We are competitive because of our quality peer review, but endangered because blue skies research is critically underfunded,’ says Cronin.

He worries that metrics will be abused owing to shortfalls in UK science funding and will encourage conservatism in research. ‘Do we want to fund safe bet science? I worry that metrics are pushing young people into writing grant proposals where the application comes first.’

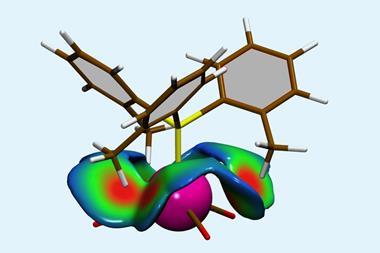

Some metrics like impact factor are derided as useless, but computational chemist Henry Rzepa at Imperial College London, UK, believes a game-changer is being overlooked. ‘Perhaps the most valuable application of these metrics would be for research data.’ The Engineering and Physical Sciences Research Council (EPSRC) mandated in May that research data must be properly managed, including having a digital object identifier (DOI). ‘Once you have a DOI for data you can apply metrics to it in the same way you can apply to a journal article, except the meaning is rather different,’ says Rzepa.

Almost everyone accepts that concerns about metrics are warranted. ‘People are taking shortcuts and using indicators in a rather crass and inappropriate way to drive through decisions,’ says Wilsdon. The review aims to help universities, funders and individual researchers use metrics responsibly.

A discussion blog has been set up and a ‘Bad Metrics’ prize will be awarded every year to an institution for egregiously bad use of metrics. ‘That kind of name and shame function is quite effective as a stick to encourage people to think twice before doing this,’ Wilsdon says.
